Application and Server Lifecycle

Finatra establishes an ordered lifecycle when creating a c.t.app.App or a c.t.server.TwitterServer and provides methods that can be implemented to run or start core logic.

This is done for several reasons:

To ensure that flag parsing and module installation (which builds the object graph) happen in the correct order, so that the injector is properly configured before a user attempts to access flags.

To ensure that object promotion and garbage collection are properly handled before a server accepts traffic.

To expose any external interface before reporting that a server is “healthy”. Otherwise a server may report that it is healthy before binding to a port, which may fail. Monitoring is typically configured to poll the HTTP Admin Interface /health endpoint at some frequency. Depending on that configuration, it could take some interval before the server is recognized as unhealthy, when in fact it never started properly because it could not bind to a port.

Thus you do not have access to the App or TwitterServer main() method. Instead, any logic should be placed in an override of an @Lifecycle-annotated method or in the application or server callbacks.

Caution

If you override an @Lifecycle-annotated method, you MUST first call super.lifecycleMethod() in your override to ensure that framework lifecycle events happen accordingly.
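The rule above can be illustrated with a small, stdlib-only Scala model. FrameworkBase, GoodServer, BadServer, and start() are hypothetical names, not Finatra APIs. The sketch only shows what happens to framework work when an override skips the super call.

```scala
import scala.collection.mutable.ListBuffer

// Hypothetical stand-in for a framework base class with an @Lifecycle-style hook.
// This is NOT the Finatra source; it only models why super must be called first.
class FrameworkBase {
  val events: ListBuffer[String] = ListBuffer.empty

  // Work the framework itself must do in this phase.
  protected def postInjectorStartup(): Unit =
    events += "framework:postInjectorStartup"

  final def start(): Unit = postInjectorStartup()
}

// Correct override: framework logic runs first, then user logic.
class GoodServer extends FrameworkBase {
  override protected def postInjectorStartup(): Unit = {
    super.postInjectorStartup()
    events += "user:postInjectorStartup"
  }
}

// Incorrect override: without the super call the framework step never runs.
class BadServer extends FrameworkBase {
  override protected def postInjectorStartup(): Unit =
    events += "user:postInjectorStartup"
}
```

Calling start() on a GoodServer records the framework event before the user event. A BadServer records only the user event, so the framework step is silently lost.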

Choosing between TwitterServer and App

See the TwitterServer v. App comparison chart to decide between an App and a TwitterServer.

See the Creating an injectable App and Creating an injectable TwitterServer sections for more information.

c.t.app.App Lifecycle

Finatra servers are, at their base, c.t.app.App applications. Therefore it will help to first cover the c.t.app.App lifecycle.

When writing a c.t.app.App, you extend the c.t.app.App trait and place your application logic inside of a public main() method.

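```scala
import com.twitter.app.App

class MyApp extends App {
  val n = flag("n", 100, "Number of items to process")

  def main(): Unit = {
    for (i <- 0 until n()) process(i)
  }

  // Placeholder for your per-item logic.
  private def process(i: Int): Unit = println(s"processing $i")
}

// Entry point for the JVM.
object MyAppMain extends MyApp
```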

c.t.app.App provides ways to tie into its lifecycle by allowing the user to register logic for different lifecycle phases. For example, to run logic in the init lifecycle phase, the user can call the init function and pass it a callback to run. App collects every call to the init function and runs the callbacks, in the order they were added, during the init lifecycle phase.

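```scala
import com.twitter.app.App
import com.twitter.util.{Closable, Future}

class MyApp extends App {
  // Placeholder Closable; substitute your own resource.
  private val MyClosable: Closable = Closable.make(_ => Future.Unit)

  init {
    // initialization logic
  }

  premain {
    // logic to run right before main()
  }

  def main(): Unit = {
    // your application logic here
  }

  postmain {
    // logic to run right after main()
  }

  onExit {
    // closing logic
  }

  closeOnExitLast(MyClosable) // final closing logic
}
```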

Additional hooks are premain, postmain, onExit and closeOnExitLast(), as shown above.

The lifecycle can thus be represented as:

```text
App#main() -->
  App#nonExitingMain() -->
    bind LoadService bindings
    run all init {} blocks
    App#parseArgs() // read command line args into Flags
    run all premain {} blocks
    run all defined main() methods
    run all postmain {} blocks
    App#close() -->
      run all onExit {} blocks
      run all Closables registered via closeOnExitLast()
```
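The following minimal, stdlib-only model makes the ordering concrete. It shows callbacks being collected at registration time and replayed phase by phase. PhasedApp, runAll, and onExitLast are hypothetical names. The real c.t.app.App also binds LoadService implementations, parses Flags, runs exit blocks concurrently, and applies a close deadline. None of that is modeled here.

```scala
import scala.collection.mutable.ArrayBuffer

// Hypothetical, simplified model of the c.t.app.App ordering above; not the
// twitter-util source.
abstract class PhasedApp {
  private val inits = ArrayBuffer.empty[() => Unit]
  private val premains = ArrayBuffer.empty[() => Unit]
  private val postmains = ArrayBuffer.empty[() => Unit]
  private val exits = ArrayBuffer.empty[() => Unit]
  private val lastExits = ArrayBuffer.empty[() => Unit]

  // Registration only stores the block; it runs later, in its phase.
  protected def init(f: => Unit): Unit = inits += (() => f)
  protected def premain(f: => Unit): Unit = premains += (() => f)
  protected def postmain(f: => Unit): Unit = postmains += (() => f)
  protected def onExit(f: => Unit): Unit = exits += (() => f)
  protected def onExitLast(f: => Unit): Unit = lastExits += (() => f) // stands in for closeOnExitLast

  protected def parseArgs(args: Array[String]): Unit // stands in for Flag parsing
  protected def main(): Unit                          // user logic

  final def runAll(args: Array[String]): Unit = {
    inits.foreach(_.apply())      // in registration order
    parseArgs(args)
    premains.foreach(_.apply())
    main()
    postmains.foreach(_.apply())
    exits.foreach(_.apply())      // sequential here only for simplicity
    lastExits.foreach(_.apply())  // always after every exit block
  }
}
```

Registering two init blocks, one block for each other phase, and then calling runAll records the blocks in the phase order listed above. Within a phase, blocks run in the order they were added.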

c.t.inject.app.App Lifecycle

The Finatra c.t.inject.app.App extends the c.t.app.App lifecycle by adding more structure to the defined main() method.

The lifecycle for a Finatra “injectable” App, c.t.inject.app.App, can be described as:

```text
App#main() -->
  App#nonExitingMain() -->
    bind LoadService bindings
    run all init {} blocks
    App#parseArgs() // read command line args into Flags
    run all premain {} blocks
    c.t.inject.app.App#main() -->
      load/install modules
      modules#postInjectorStartup()
      postInjectorStartup()
      warmup()
      beforePostWarmup()
      postWarmup()
      afterPostWarmup()
      modules#postWarmupComplete()
      register application started
      c.t.inject.app.App#run()
    run all postmain {} blocks
    App#close() -->
      run all onExit {} blocks
      run all Closables registered via closeOnExitLast()
```
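The refinement above works essentially as a template method. main() is fixed, and users hook into the named phases. The following is a hypothetical, stdlib-only model that records the order in which the hooks run. InjectableMiniApp and installModules are illustrative names, not the Finatra source.

```scala
import scala.collection.mutable.ListBuffer

// Hypothetical model of how c.t.inject.app.App turns main() into ordered hooks.
// Names mirror the outline above; bodies only record that the phase ran.
abstract class InjectableMiniApp {
  val log: ListBuffer[String] = ListBuffer.empty

  protected def installModules(): Unit = log += "load/install modules"
  protected def postInjectorStartup(): Unit = log += "postInjectorStartup"
  protected def warmup(): Unit = log += "warmup"
  protected def beforePostWarmup(): Unit = log += "beforePostWarmup"
  protected def postWarmup(): Unit = log += "postWarmup"
  protected def afterPostWarmup(): Unit = log += "afterPostWarmup"

  // User logic goes here instead of in main().
  protected def run(): Unit

  // final: subclasses can hook into the phases but cannot reorder them.
  final def main(): Unit = {
    installModules()
    postInjectorStartup()
    warmup()
    beforePostWarmup()
    postWarmup()
    afterPostWarmup()
    log += "register application started"
    run()
  }
}
```

Suppose a subclass overrides warmup() and calls super.warmup() first. Its logic is then spliced in between the framework's warmup and beforePostWarmup phases, and the subclass cannot change the overall order.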

For more information on creating an “injectable” App with Finatra, see the documentation here.

c.t.server.TwitterServer Lifecycle

c.t.server.TwitterServer is an extension of c.t.app.App and thus inherits the c.t.app.App lifecycle. It also adds the ability to include “warmup” lifecycle phases, which are a refinement of the defined main() phase of the c.t.app.App lifecycle. Specifically, the c.t.server.Lifecycle.Warmup trait exposes two methods: prebindWarmup and warmupComplete.

These methods are provided for the user to call at the appropriate points, typically in the user-defined main() method before awaiting on the external interface.

The idea is that within your user-defined main() method you may want logic to warm up the server before it accepts traffic on any defined external interface. By default, the prebindWarmup method attempts to run a System.gc in order to promote objects to the old generation, in an attempt to incur a GC pause before your server accepts any traffic.

Users then have a way to signal that warmup is done and the server is now ready to start accepting traffic. This is done by calling warmupComplete().

To add these phases, users mix the c.t.server.Lifecycle.Warmup trait into their c.t.server.TwitterServer extension.
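Here is a minimal sketch of the mix-in. It assumes a simplified trait that mirrors only the two documented methods. WarmupModel, ModelServer, and the healthy check are hypothetical and are not the twitter-server API. The point is that the server reports healthy only after the interface is bound and warmupComplete() has been called.

```scala
// Hypothetical model of mixing a warmup trait into a server, loosely following
// the c.t.server.Lifecycle.Warmup description above.
trait WarmupModel {
  @volatile private var ready = false

  // Mirrors the documented default: attempt a GC before taking traffic.
  def prebindWarmup(): Unit = System.gc()

  // Signals that warmup is done and traffic may be accepted.
  def warmupComplete(): Unit = ready = true

  def isReady: Boolean = ready
}

class ModelServer extends WarmupModel {
  @volatile var bound = false

  def main(): Unit = {
    // application-specific warmup (e.g. priming caches) would run here
    prebindWarmup()
    bound = true // stands in for binding the external interface
    warmupComplete()
  }

  // Reports OK only once the interface is bound and warmup is complete.
  def healthy: Boolean = bound && isReady
}
```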

c.t.inject.server.TwitterServer Lifecycle

Finatra defines an “injectable” TwitterServer, c.t.inject.server.TwitterServer which itself is an extension of c.t.server.TwitterServer and the Finatra “injectable” App, c.t.inject.app.App.

The Finatra “injectable” TwitterServer, c.t.inject.server.TwitterServer mixes in the c.t.server.Lifecycle.Warmup trait by default to ensure that warmup is performed where most applicable and further refines the warmup lifecycle as described in the next section.

For more information on creating an “injectable” TwitterServer with Finatra, see the documentation here.

Server Startup Lifecycle

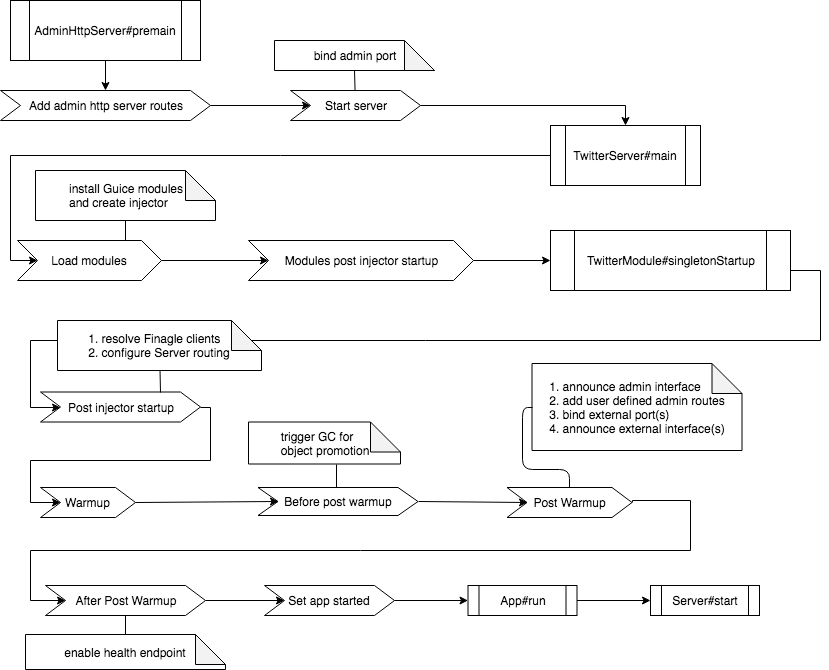

Finatra servers inherit the c.t.app.App lifecycle and, as mentioned, also mix in the TwitterServer c.t.server.Lifecycle.Warmup trait. On top of that, Finatra further refines the lifecycle by adding more defined phases. These phases all run within a defined main(), and thus in the “main” c.t.app.App lifecycle phase. They are intended to ensure that the underlying dependency injection framework is properly instantiated, all Twitter Util Flags are properly parsed, external interfaces are properly bound, and the application is correctly started with minimal intervention from the implementor.

In text, at a high-level, the start-up lifecycle of a Finatra server looks like:

```text
App#main() -->
  App#nonExitingMain() -->
    bind LoadService bindings
    run all init {} blocks
    App#parseArgs() // read command line args into Flags
    run all premain {} blocks -->
      add routes to TwitterServer AdminHttpServer
      bind interface and start TwitterServer AdminHttpServer
    c.t.inject.server.TwitterServer#main() -->
      c.t.inject.app.App#main() -->
        load/install modules
        modules#postInjectorStartup()
        postInjectorStartup() -->
          resolve finagle clients
          setup()
        warmup()
        beforePostWarmup() -->
          Lifecycle#prebindWarmup()
        postWarmup() -->
          announce TwitterServer AdminHttpServer interface
          bind external interfaces
          announce external interfaces
        afterPostWarmup() -->
          Lifecycle#warmupComplete()
        modules#postWarmupComplete()
        register application started
        c.t.inject.app.App#run() -->
          c.t.inject.server.TwitterServer#start()
      block on awaitables
    run all postmain {} blocks
    App#close() -->
      run all onExit {} blocks
      run all Closables registered via closeOnExitLast()
```

For a visual representation, see the diagram at the start of this section.

Server Shutdown Lifecycle

Upon graceful shutdown of an application or a server, all registered onExit, closeOnExit, and closeOnExitLast blocks are executed. See c.t.app.App#exits and c.t.app.App#lastExits.

For a server, this includes closing the TwitterServer HTTP Admin Interface and shutting down and closing all installed modules. For extensions of the HttpServer or ThriftServer traits this also includes closing any external interfaces.

Important

Note that the order of execution for all registered onExit and closeOnExit blocks is not guaranteed as they are executed on graceful shutdown roughly in parallel. Thus it is up to implementors to enforce any desired ordering.

For example, suppose you have code that reads from a queue (via a “subscriber”), transforms the data, and then publishes it (via a “publisher”) to another queue. When the main application is exiting, you most likely want to close the “subscriber” first to ensure that you transform and publish all available data before closing the “publisher”.

Assuming that both objects are of type c.t.util.Closable, a simple way to close them would be:

```scala
closeOnExit(subscriber)
closeOnExit(publisher)
```

However, the “subscriber” and the “publisher” would close roughly in parallel. This could lead to data inconsistencies in your server if the “publisher” closes while the “subscriber” is still reading.

Ordering onExit and closeOnExit functions?

Assuming that the #close() method of both returns a Future[Unit] (as with a c.t.util.Closable), one way of doing this is:

```scala
import com.twitter.util.Await

onExit {
  Await.result(subscriber.close(defaultCloseGracePeriod))
  Await.result(publisher.close(defaultCloseGracePeriod))
}
```

where the defaultCloseGracePeriod is the c.t.app.App#defaultCloseGracePeriod function.

In the above example we simply await on the #close() of the “subscriber” first and then the #close() of the “publisher” thus ensuring that the “subscriber” will close before the “publisher”.
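The same sequencing can be modeled without Finatra, using the Scala standard library. This sketch uses scala.concurrent.Future in place of com.twitter.util.Future. SlowResource and OrderedShutdown are hypothetical names. Note that scala.concurrent.Await.result always requires a timeout, unlike the single-argument com.twitter.util.Await.result used above.

```scala
import java.util.concurrent.ConcurrentLinkedQueue
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration._

// Stdlib-only model: scala.concurrent.Future stands in for com.twitter.util.Future,
// and SlowResource is a hypothetical Closable-like type.
final class SlowResource(name: String, delayMs: Long, log: ConcurrentLinkedQueue[String])(
    implicit ec: ExecutionContext) {
  def close(): Future[Unit] = Future {
    Thread.sleep(delayMs) // simulate draining in-flight work
    log.add(s"$name closed")
    ()
  }
}

object OrderedShutdown {
  // close() on `second` is not even called until `first` has finished closing.
  def closeInOrder(first: SlowResource, second: SlowResource, atMost: FiniteDuration): Unit = {
    Await.result(first.close(), atMost)
    Await.result(second.close(), atMost)
  }
}
```

Even when the first resource takes longer to close, close() on the second is not invoked until the first has completed. As a result, "subscriber closed" is always recorded before "publisher closed".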

However, we are not providing a timeout to the Await.result. We should ideally provide one as well, since we do not want to accidentally block server shutdown if the defaultCloseGracePeriod is set to something high or infinite (e.g., Duration.Top).

But if we do not know the configured value of the defaultCloseGracePeriod, this gets complicated. We could hardcode a timeout for the Await, or avoid using the defaultCloseGracePeriod entirely:

```scala
import com.twitter.conversions.DurationOps._

onExit {
  Await.result(subscriber.close(defaultCloseGracePeriod), 5.seconds)
  Await.result(publisher.close(defaultCloseGracePeriod), 5.seconds)
}
```

or:

```scala
import com.twitter.conversions.DurationOps._

onExit {
  Await.result(subscriber.close(4.seconds), 5.seconds)
  Await.result(publisher.close(4.seconds), 5.seconds)
}
```
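A stdlib-only sketch shows why bounding the wait matters. It uses scala.concurrent in place of com.twitter.util. BoundedWait and neverClosing are hypothetical names. neverClosing stands in for a close() that never completes, such as one whose grace period is effectively unbounded.

```scala
import java.util.concurrent.TimeoutException
import scala.concurrent.{Await, Future, Promise}
import scala.concurrent.duration._

// Stdlib-only model: scala.concurrent.Await.result takes a timeout and throws
// TimeoutException when it expires before the Future completes.
object BoundedWait {
  // A close() whose Future is never completed.
  def neverClosing(): Future[Unit] = Promise[Unit]().future

  // true if the close finished within atMost, false if we gave up waiting.
  def closeWithin(close: () => Future[Unit], atMost: FiniteDuration): Boolean =
    try {
      Await.result(close(), atMost)
      true
    } catch {
      case _: TimeoutException => false
    }
}
```

Without the bound, waiting on neverClosing() would block shutdown indefinitely. With it, shutdown proceeds after atMost, at the cost of not knowing whether the resource finished closing.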

However, this is obviously not ideal and there is an easier way. You can enforce the ordering of closing Closables by using closeOnExitLast.

A c.t.util.Closable passed to closeOnExitLast will be closed after all onExit and closeOnExit functions are executed. E.g.,

```scala
closeOnExit(subscriber)
closeOnExitLast(publisher)
```

In this code, the “publisher” is guaranteed to be closed after the “subscriber”.

Note

All the exit functions (onExit, closeOnExit, and closeOnExitLast) use the defaultCloseGracePeriod as their close “deadline”. A TimeoutException is raised if the exits (the collected onExit and closeOnExit functions) do not all close within the deadline. The same happens if the lastExits (the collected closeOnExitLast functions) do not close within the deadline.

If you have multiple c.t.util.Closable objects you want to close in parallel and one you want to close after all the others, you could do:

```scala
closeOnExit(subscriberA)
closeOnExit(subscriberB)
closeOnExit(subscriberC)
closeOnExitLast(publisher)
```

The “publisher” is guaranteed to be closed after “subscriberA”, “subscriberB”, and “subscriberC” have closed.
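The two-phase behavior can be modeled with the Scala standard library. This sketch uses scala.concurrent.Future in place of com.twitter.util.Future. Closer and ExitRegistry are hypothetical stand-ins for c.t.util.Closable and c.t.app.App. Unlike the real App, the model takes a single timeout rather than applying the defaultCloseGracePeriod as a deadline.

```scala
import scala.collection.mutable.ListBuffer
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration._

// Hypothetical stand-in for c.t.util.Closable.
trait Closer {
  def close(): Future[Unit]
}

// Hypothetical model of closeOnExit vs closeOnExitLast; not the c.t.app.App source.
final class ExitRegistry(implicit ec: ExecutionContext) {
  private val exits = ListBuffer.empty[Closer]
  private val lastExits = ListBuffer.empty[Closer]

  def closeOnExit(c: Closer): Unit = exits += c
  def closeOnExitLast(c: Closer): Unit = lastExits += c

  def shutdown(atMost: FiniteDuration): Unit = {
    // Phase 1: start every exit's close without waiting, so they run concurrently.
    Await.result(Future.sequence(exits.toList.map(_.close())), atMost)
    // Phase 2 starts only after phase 1 has completed.
    Await.result(Future.sequence(lastExits.toList.map(_.close())), atMost)
  }
}
```

In the model, three subscribers are registered with closeOnExit and one publisher with closeOnExitLast, and then shutdown is called. The publisher is always recorded last, regardless of how long each subscriber takes to close.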

What to do if you don’t have a c.t.util.Closable?

You can simply use the onExit block to perform any shutdown logic, or you can wrap a function in a c.t.util.Closable to be passed to closeOnExit or closeOnExitLast.

For example:

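```scala
import com.twitter.conversions.DurationOps._
import com.twitter.util.{Await, Closable, Future}

// DatabaseConnection, someFutureOperation, prepWork, anotherFutureOperation,
// and queue are placeholders for your own resources.
onExit {
  DatabaseConnection.drain()
  Await.result(someFutureOperation, 2.seconds)
}

closeOnExit {
  Closable.make { deadline =>
    prepWork.start()
    anotherFutureOperation // the returned Future[Unit] completes the close
  }
}

closeOnExitLast {
  Closable.make { deadline =>
    queue.blockingStop(deadline)
    Future.Unit
  }
}
```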

You can also wrap multiple functions in a Closable:

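```scala
// database, fileCleanUp, and pushData are placeholders for your own resources.
// (`do` is a reserved word in Scala, so the clean-up method is named run here.)
closeOnExit {
  Closable.make { deadline =>
    database.drain()
    fileCleanUp.run()
    pushData(deadline)
    Future.Unit
  }
}
```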

Again, the code in onExit and closeOnExit runs roughly in parallel and is guaranteed to finish closing before the functions in closeOnExitLast are run.

Note

Multiple closeOnExitLast Closables will be closed in parallel with each other but after all onExit and closeOnExit functions have closed.
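Closable.make adapts a function of the deadline into a closable. Below is a stdlib-only model of that idea. It uses scala.concurrent.duration.Deadline in place of com.twitter.util.Time, and DeadlineCloser and its make method are hypothetical names.

```scala
import scala.concurrent.Future
import scala.concurrent.duration.Deadline

// Hypothetical stand-in for c.t.util.Closable, keyed on a stdlib Deadline.
trait DeadlineCloser {
  def close(deadline: Deadline): Future[Unit]
}

object DeadlineCloser {
  // Nothing runs at construction time; f runs only when close is called.
  def make(f: Deadline => Future[Unit]): DeadlineCloser = new DeadlineCloser {
    def close(deadline: Deadline): Future[Unit] = f(deadline)
  }
}
```

The wrapped function runs only when close is called. Registering such a closable at startup therefore performs no shutdown work until the application actually exits, and the function can use the deadline it receives to bound its own work.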

Modules

Modules also provide hooks into the lifecycle. These hooks allow instances provided to the object graph to be plugged into the overall application or server lifecycle. See the Module Lifecycle section for more information.

More Information

As the diagram in the Startup section shows, the lifecycle of an application can be non-trivial, especially in the case of a TwitterServer.

For more information on how to create an injectable c.t.app.App or a c.t.server.TwitterServer see the Creating an injectable App and Creating an injectable TwitterServer sections.